Understanding and Mitigating Hallucinations in Large Language Models (LLMs): A Guide to Accurate AI

October 9, 2024

Introduction

In the last few years, AI technology has seen widespread adoption, and Large Language Models (LLMs) like GPT-3 and its successors have made remarkable strides in generating coherent and contextually relevant text. However, these models sometimes produce outputs that contain false, misleading, or nonsensical information, a phenomenon commonly referred to as "hallucination." This article explores why LLMs hallucinate, how to detect hallucinations, and strategies to mitigate them. It also introduces the benefits of using multiple models to reduce hallucinations and highlights AnyModel, a platform that facilitates these comparisons.

What Are Hallucinations?

LLMs aren't sentient beings with imaginations. Their hallucinations stem from the way they learn and process information. A hallucination occurs when an LLM's output deviates from factual accuracy, often blending true statements with spurious ones. These errors can be very misleading because they usually sound plausible and are presented authoritatively. As the technology matures and users increasingly rely on AI-generated results, this problem will become more acute.

Causes of Hallucinations

- Limited training data: If the training dataset is incomplete, biased, or lacks relevant examples, the model may generate fictional information to fill the gaps.

- Training Data Biases: The vast datasets used to train LLMs can contain biases and inaccuracies. These biases can be reflected in the model's outputs, leading to biased or false information.

- Lack of real-world understanding: LLMs process text patterns rather than having true comprehension.

- Lack of domain knowledge: LLMs do not possess the same level of domain-specific knowledge as a human expert, leading to inaccuracies or fabrications.

- Overfitting: A model that fits its training data too closely may memorize specifics instead of learning general patterns, and then generate unrealistic outputs for unfamiliar inputs.

- Insufficient context: Limited input information can lead to incorrect assumptions.

- Probability-based generation: LLMs generate text by predicting a likely next token, and the statistically most likely continuation is not always the factually correct one.

- Overconfidence: LLMs are designed to produce fluent, persuasive text, so they can present fabricated information in a confident tone that users may mistake for correctness.

- Temperature settings: Higher temperature values make the model more willing to pick less likely tokens, which increases creativity but also the risk of hallucinations.
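
To make the last two causes concrete, here is a minimal Python sketch of how temperature reshapes next-token probabilities. The candidate tokens and their scores are invented for illustration, and real models work over vocabularies of many thousands of tokens, so treat this as a toy model rather than a description of any particular LLM.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, scaled by a temperature value."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens (illustrative only).
candidates = ["Paris", "Lyon", "Berlin"]
logits = [4.0, 2.0, 1.0]

for temperature in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    summary = ", ".join(f"{tok}={p:.3f}" for tok, p in zip(candidates, probs))
    print(f"temperature={temperature}: {summary}")
```

At a low temperature almost all of the probability sits on the top-scoring token. At a high temperature the lower-scoring tokens gain a meaningful share, so a sampled answer is more likely to be one of them.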

Recognizing Hallucinations: Red Flags to Watch Out For

Detecting LLM hallucinations requires careful scrutiny. Look out for these telltale signs:

- Unsourced Claims: If an LLM makes a factual claim without providing a source or evidence, treat it with skepticism.

- Logical Inconsistencies: Pay attention to contradictions or nonsensical statements within the generated text.

- Unusual or Unrealistic Information: Be wary of information that seems too good to be true, contradicts common knowledge, or feels out of place.
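
The first red flag can be partly automated. The sketch below is a deliberately crude heuristic, not an established detection method: it assumes that a sentence containing a number is a factual claim, and that a citation looks like `[1]` or a URL. Both assumptions, and the function name `flag_unsourced`, are ours for illustration.

```python
import re

# Assumed citation styles: a bracketed number such as [1], or a URL.
CITATION = re.compile(r"\[\d+\]|https?://\S+")
HAS_NUMBER = re.compile(r"\d")

def flag_unsourced(text):
    """Return sentences that contain a number but no recognizable citation."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [
        sentence
        for sentence in sentences
        if HAS_NUMBER.search(sentence) and not CITATION.search(sentence)
    ]

sample = (
    "The tower opened in 1889 [1]. "
    "It is 330 metres tall. "
    "Many people visit it."
)
for sentence in flag_unsourced(sample):
    print("Check this claim:", sentence)
```

A flagged sentence is not necessarily wrong, and an unflagged one is not necessarily right. The output is only a list of claims worth verifying by hand.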

Combating Hallucinations: Strategies for Greater Accuracy

While completely eliminating hallucinations is an ongoing challenge, these strategies can help:

- Provide Clear and Specific Prompts: The more context and guidance you give an LLM in your prompts, the more likely it is to generate accurate and relevant responses.

- Cross-Reference Information: Always verify information from LLMs with trusted sources before relying on it.

- Fine-tuning models: Train models on domain-specific data to improve accuracy.

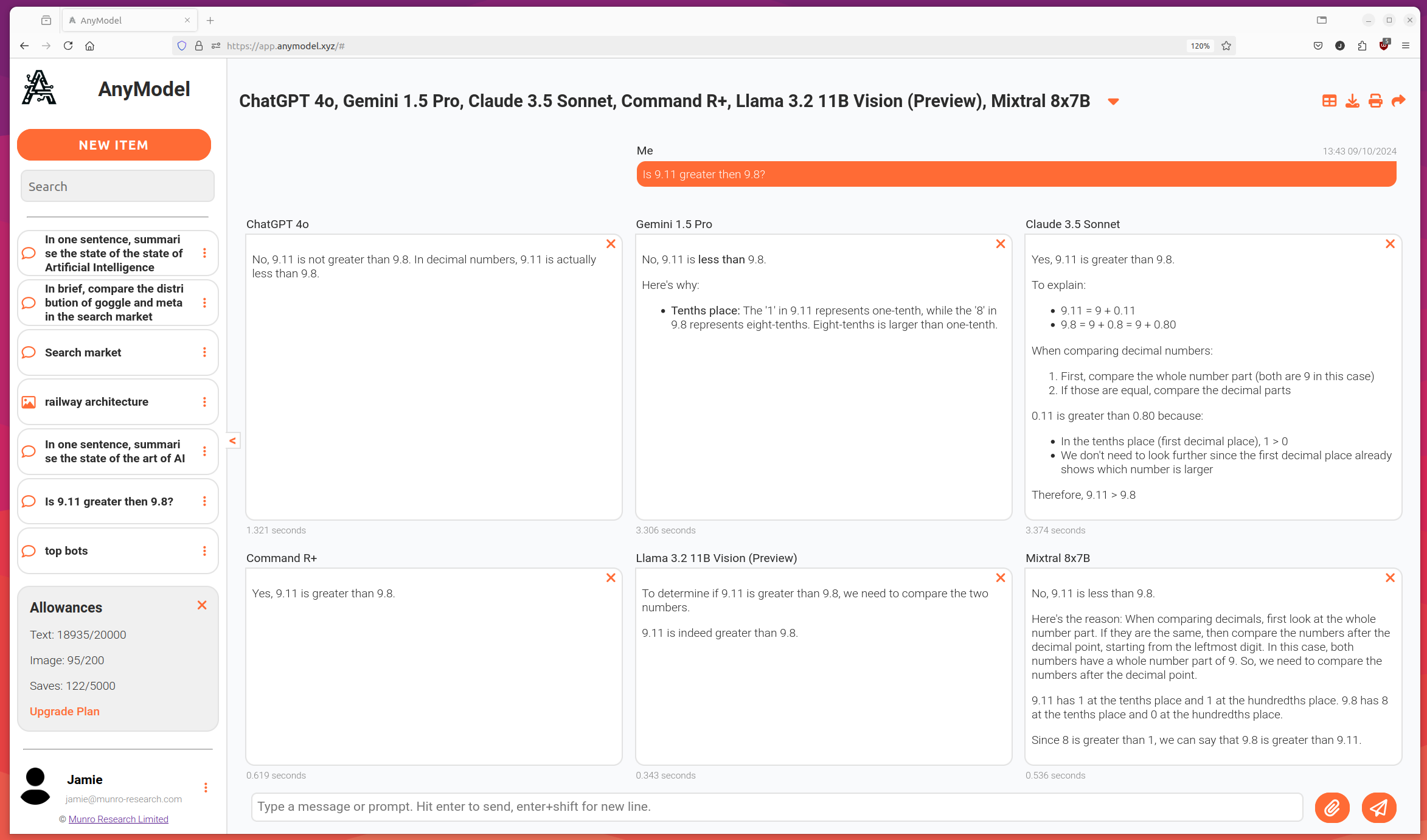

- Leveraging Multiple Models: By comparing outputs from different models, users can identify discrepancies and choose the most accurate response. This approach diminishes the risk of relying on erroneous outputs due to a single model's limitations.
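
As an illustration of the first strategy, the following Python sketch assembles a prompt that supplies context and tells the model what to do when the context falls short. The function name `build_grounded_prompt` and the instruction wording are assumptions made for this example. The resulting string can be sent to whichever model you use.

```python
def build_grounded_prompt(question, context_passages):
    """Assemble a prompt that confines the model to the supplied context."""
    if not context_passages:
        raise ValueError("at least one context passage is required")
    # Number each passage so the model can refer to it in its answer.
    numbered = "\n".join(
        f"[{i}] {passage.strip()}"
        for i, passage in enumerate(context_passages, start=1)
    )
    return (
        "Answer the question using only the context below.\n"
        "Cite the passage number that supports each claim.\n"
        "If the context does not contain the answer, say that you do not know.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question.strip()}\n"
    )

prompt = build_grounded_prompt(
    "When was the library founded?",
    ["The library was founded in 1901.", "It moved to a new building in 1950."],
)
print(prompt)
```

Asking for passage numbers also makes the answer easier to cross-reference, since each claim points back to a specific piece of the context you provided.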

The Power of Multiple Models

One of the most effective ways to detect and reduce hallucinations is to run the same prompt through multiple LLMs and compare their outputs. Inconsistencies between the answers point to potential hallucinations, and when models disagree, further research is needed to determine which answer is accurate. Using several models also offsets the limitations of any single one:

- Increased accuracy: Different models may have varying strengths and weaknesses, leading to more comprehensive results.

- Reduced hallucination exposure: Discrepancies between model outputs can highlight potential inaccuracies.

- Diverse perspectives: Multiple models can provide a range of interpretations and solutions.

- Confidence assessment: Consistent responses across models can indicate higher reliability.
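
The comparison described above can be sketched in a few lines of Python. This example assumes you have already collected one short answer per model. The model names are placeholders, and the exact-match comparison after normalization is a simplification that only suits short factual answers; longer responses would need a semantic comparison.

```python
import re
from collections import Counter

def normalize(answer):
    """Lowercase, drop punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", "", answer.lower())
    return re.sub(r"\s+", " ", cleaned).strip()

def compare_answers(answers):
    """Compare short answers from several models.

    answers maps a model name to its answer text. Returns a tuple of
    (majority_answer, agreement_ratio, dissenting_models).
    """
    if not answers:
        raise ValueError("no answers to compare")
    normalized = {model: normalize(text) for model, text in answers.items()}
    counts = Counter(normalized.values())
    majority, votes = counts.most_common(1)[0]
    dissenters = sorted(m for m, a in normalized.items() if a != majority)
    return majority, votes / len(answers), dissenters

# Placeholder model names and answers, for illustration only.
answers = {"model_a": "Canberra.", "model_b": "canberra", "model_c": "Sydney"}
majority, ratio, dissenters = compare_answers(answers)
print(f"majority={majority!r} agreement={ratio:.2f} dissenting={dissenters}")
```

A low agreement ratio is a cue to research the question further. A high ratio indicates higher reliability but does not prove correctness, because models can share the same mistake.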

Introducing AnyModel

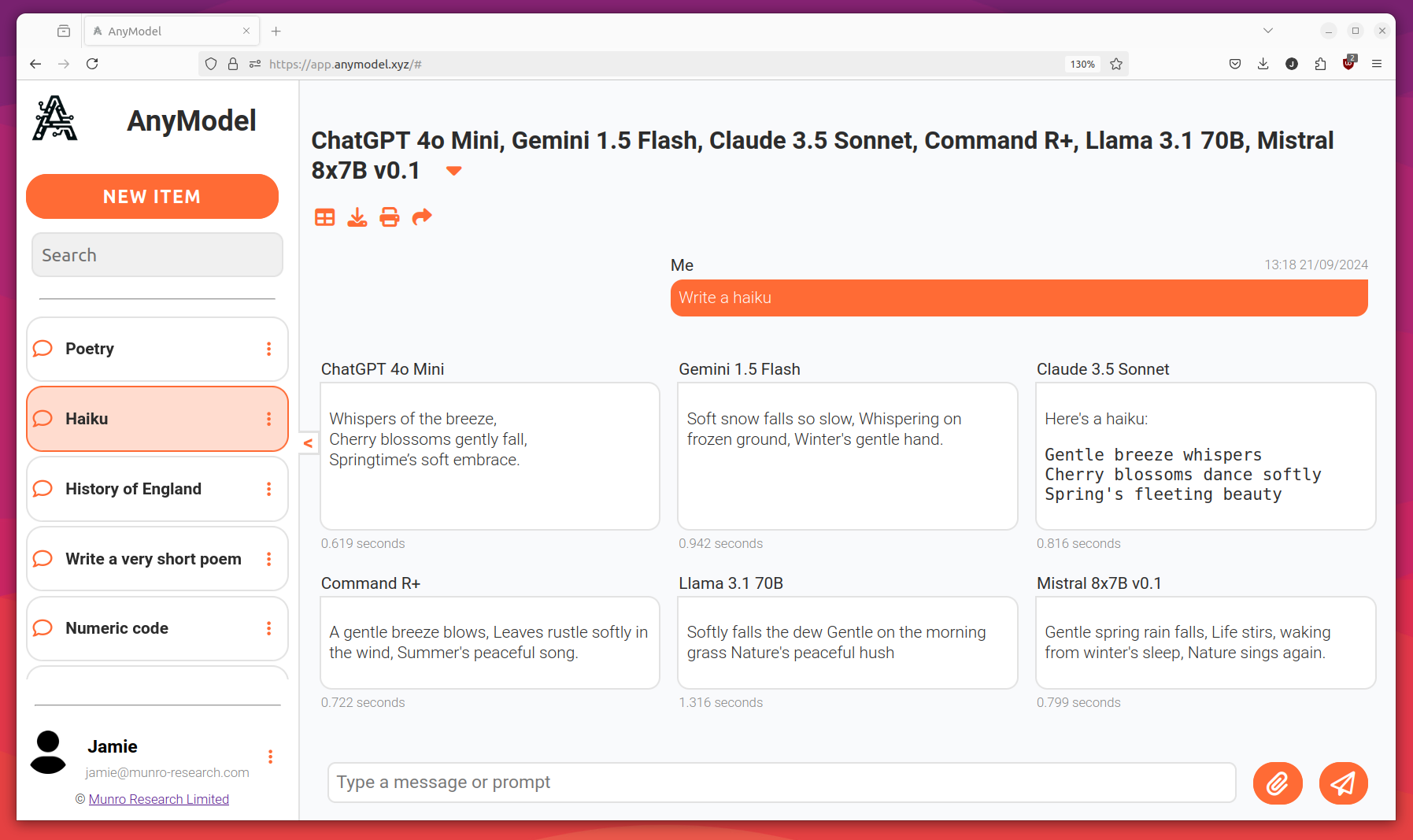

AnyModel is an all-in-one AI platform that provides access to a curated library of LLMs from a single account and subscription. With AnyModel, you can easily compare the outputs of multiple models side-by-side, detecting potential hallucinations and improving the accuracy of your applications. The platform offers:

- Comprehensive Library: Access a diverse range of LLMs, each providing unique perspectives and strengths, from a single platform.

- Ease of Comparison: The side-by-side comparison feature aids in scrutinizing different models' outputs, promoting accuracy and consistency.

- Centralized Access and Management: No need to juggle multiple accounts and subscriptions. All your AI needs are met by a single account and platform.

- User-friendly interface: Seamlessly switch between models and analyze results efficiently.

Conclusion

LLM hallucinations are a significant limitation of language models, but by understanding their causes, detection techniques, and prevention methods, you can develop more accurate and reliable applications. Using multiple models and comparing their outputs is a powerful technique to detect and reduce hallucinations, and AnyModel offers an innovative solution to streamline this process. By leveraging the strengths of multiple models, you can build more robust and trustworthy AI applications. Try AnyModel today for free and discover the benefits of multi-model comparison.
